As data continues to grow across industries, from cloud computing to social media, businesses need more than just traditional storage solutions. While we might consider simply expanding the capacity of physical disks to meet growing storage needs, this approach presents several challenges. Upgrading or downgrading disk capacity is not only cumbersome but also involves significant downtime and the risk of data corruption. Additionally, individual disks are a single point of failure, leading to potential data loss and decreased availability.

The solution to these challenges lies in distributed file systems, which form the backbone of modern data infrastructure.

What are distributed file systems?

Distributed file systems are systems that allow data to be stored across multiple storage nodes and locations while appearing to users and applications as a single, unified system.

Let’s explore and understand the key characteristics of distributed file systems through real-world application scenarios.

Scalability

Distributed file systems offer the ability to manage vast amounts of data across multiple storage devices. This scalability can be considered on both short-term and long-term scales.

Short-term scalability

Short-term scalability refers to the system’s ability to handle sudden increases in data and user load by adding more storage nodes (scale-out). It also covers releasing resources when the load decreases (scale-in), so that capacity is not left idle.

Example: A social media platform experiences a surge in user activity during peak hours, resulting in a rapid increase in data generated from user posts, images, and videos. With a distributed file system, the platform can seamlessly scale its storage infrastructure by adding more nodes to accommodate the growing volume of user data, ensuring users can continue to upload and access content without delays or storage limitations, even during periods of high traffic.

Long-term scalability

Long-term scalability involves planning for the growth in storage needs as a company expands, ensuring that the system can handle increasing data volumes over time.

Example: An e-commerce company starts small but anticipates significant growth over the next decade. By implementing a distributed file system, the company can begin with a modest number of nodes and incrementally add more as its customer base and product catalog expand. This long-term scalability ensures that the system can handle increasing data volumes from product listings, customer data, and transaction records as the company grows.

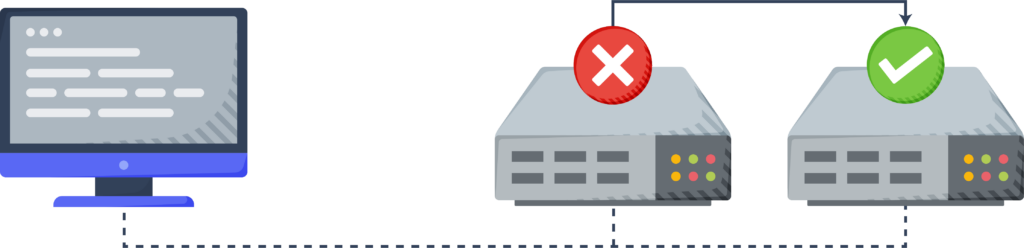

Fault tolerance and availability

Distributed file systems ensure high availability and fault tolerance through data replication and redundancy. By storing copies of data across different nodes, they mitigate the risk of data loss due to hardware failures and enhance overall system resilience and uptime.

Example: An online banking platform stores sensitive customer data, including account balances, transaction histories, and personal information. To ensure continuous access to banking services, the platform uses a distributed file system with data replication and redundancy techniques. In the event of node failures, hardware faults, or network disruptions, the system can withstand these issues without losing critical customer data, maintaining the integrity and availability of banking services.
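The replication idea can be illustrated with a minimal Python sketch. The `Node` and `ReplicatedStore` classes, the placement rule, and the replica count of three are assumptions made for illustration and are not taken from any particular file system.

```python
class Node:
    """A hypothetical storage node that can be marked as failed."""

    def __init__(self, name):
        self.name = name
        self.alive = True
        self.data = {}


class ReplicatedStore:
    """Writes every object to `replicas` nodes and reads from any live copy."""

    def __init__(self, nodes, replicas=3):
        if replicas > len(nodes):
            raise ValueError("not enough nodes for the requested replica count")
        self.nodes = nodes
        self.replicas = replicas

    def _targets(self, key):
        # Deterministic placement (illustrative): start at a position derived
        # from the key and take the next `replicas` nodes in ring order.
        start = sum(key.encode()) % len(self.nodes)
        return [self.nodes[(start + i) % len(self.nodes)]
                for i in range(self.replicas)]

    def put(self, key, value):
        for node in self._targets(key):
            if node.alive:
                node.data[key] = value

    def get(self, key):
        # Try each replica in turn and skip nodes that are down.
        for node in self._targets(key):
            if node.alive and key in node.data:
                return node.data[key]
        raise KeyError(f"no live replica holds {key!r}")


nodes = [Node(f"node-{i}") for i in range(5)]
store = ReplicatedStore(nodes, replicas=3)
store.put("account:42", {"balance": 100})

# Simulate two of the three replicas failing; the read still succeeds.
targets = store._targets("account:42")
targets[0].alive = False
targets[1].alive = False
print(store.get("account:42"))
```

With three copies, the data stays readable as long as at least one of the nodes holding it is alive. A real system would also re-replicate the lost copies in the background.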

Data accessibility

Distributed file systems enable seamless access to data from multiple locations, allowing users to retrieve data from the nearest server. This is especially important for organizations with geographically dispersed teams and customers, as it reduces latency and improves overall system performance.

Example: A video streaming service needs to deliver content to users around the globe. By using a distributed file system, the service can store copies of popular videos in data centers located in various regions. When a user requests a video, the system directs the request to the nearest data center, reducing latency and providing a smooth streaming experience. This distribution of data also helps balance the load across multiple nodes, enhancing overall throughput and minimizing bottlenecks.
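As a rough sketch of the routing step, the snippet below picks the replica with the lowest measured latency. The region names, the latency figures, and the `nearest_replica` helper are hypothetical.

```python
def nearest_replica(latency_ms, replicas):
    """Return the replica region with the lowest measured latency."""
    candidates = [region for region in replicas if region in latency_ms]
    if not candidates:
        raise ValueError("no latency measurement for any replica")
    return min(candidates, key=lambda region: latency_ms[region])


# Regions that hold a copy of the requested video (illustrative values).
video_replicas = ["us-east", "eu-west", "ap-south"]
# Latency from one client to each region, in milliseconds (illustrative).
client_latency_ms = {"us-east": 140, "eu-west": 25, "ap-south": 210}

print(nearest_replica(client_latency_ms, video_replicas))  # eu-west
```

Production systems usually combine latency with current load and replica health, but the selection principle is the same.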

Cost efficiency

Distributed file systems allow for cost-effective storage solutions by utilizing commodity hardware and distributed computing principles. This helps in reducing infrastructure costs while maintaining high performance.

Example: A real-world example comes from Facebook’s Haystack, a photo storage system designed to handle billions of images. By moving to a distributed storage system using commodity hardware, Facebook was able to achieve substantial cost and performance efficiencies. According to the paper “Finding a Needle in Haystack: Facebook’s Photo Storage,” each usable terabyte in Haystack costs approximately 28% less and processes about 4 times more reads per second compared to an equivalent terabyte on a traditional NAS appliance.

Distributed file systems come with their own set of challenges, particularly in ensuring consistency among multiple replicas. When designing such systems, trade-offs (as stated by the CAP theorem) must be made between various requirements, prioritizing those that are essential over those that are merely desirable. For instance, some applications may demand strong consistency guarantees, ensuring that all users see the same data at the same time, while others may prioritize strict availability, allowing users to access the system even if some data is temporarily inconsistent.

To address these varying requirements, many distributed file systems are designed with configurability in mind. They offer the option to operate under a relaxed consistency model, enabling developers to choose the level of consistency needed for their specific use cases. This configurability allows organizations to balance the trade-offs between consistency and availability based on their operational needs, ensuring a more tailored approach to data management.

Now that we have discussed the core characteristics of distributed file systems, let’s explore how these principles are applied in distributed file systems developed by leading tech companies to solve real-world challenges. We’ll examine the design of these systems, the specific requirements they meet, the design trade-offs they make, and how they adapt to evolving needs.

Real-world examples

We will cover the distributed file systems developed by Google, the Apache Software Foundation, and Meta (formerly Facebook). Each system was designed to meet the specific storage requirements of the applications it serves.

Google: Google File System (GFS)

GFS was developed by Google to handle the growing data processing demands of its applications. It serves as the backbone of Google’s infrastructure, supporting a wide range of applications and services, including:

- Web indexing: GFS stores and processes the vast amounts of data collected by Google’s web crawlers for indexing and search purposes.

- Data analytics: GFS powers Google’s data analytics platforms, enabling the processing of large-scale datasets for business intelligence and decision-making.

- Log processing: GFS is used to store and analyze log data generated by various Google services, helping to identify trends, monitor system performance, and troubleshoot issues.

Architecture of GFS

GFS comprises three main components: the GFS client library, the manager node, and chunkservers.

GFS client library: Clients interact with the GFS through the GFS client library, abstracting the underlying file system operations. It communicates with the manager node to obtain metadata information and directly with chunkservers to read or write data chunks. It is also responsible for data caching, error handling, and retry logic.

Manager node: The manager node acts as the central coordinator of the file system. It manages metadata such as file namespaces, access control information, and the mapping of files to data chunks. The manager node also handles operations such as chunk creation, replication management, and load balancing across chunkservers.

Chunkservers: Chunkservers are responsible for storing data in the form of fixed-size chunks, typically 64 MB in size. These servers manage the actual storage of data on local disks and handle read and write requests from clients. Each chunk is replicated across multiple chunkservers (three replicas by default) to ensure fault tolerance and data durability.
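The interaction between the three components can be sketched as follows. This is a simplified model under stated assumptions: the `Manager` and `Chunkserver` classes, the file name, the chunk handles, and the server names are all hypothetical, and the read is assumed to stay within a single chunk.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB, the chunk size mentioned above


class Manager:
    """Holds metadata only: file name -> list of (chunk handle, replica locations)."""

    def __init__(self):
        self.files = {}

    def lookup(self, filename, chunk_index):
        return self.files[filename][chunk_index]


class Chunkserver:
    """Holds chunk contents keyed by chunk handle."""

    def __init__(self):
        self.chunks = {}

    def read(self, handle, offset, length):
        return self.chunks[handle][offset:offset + length]


def client_read(manager, chunkservers, filename, byte_offset, length):
    # 1. Translate the byte offset into a chunk index and an offset inside it.
    chunk_index, chunk_offset = divmod(byte_offset, CHUNK_SIZE)
    # 2. Ask the manager for metadata only (handle and replica locations).
    handle, locations = manager.lookup(filename, chunk_index)
    # 3. Fetch the bytes directly from one replica; the manager is not involved.
    return chunkservers[locations[0]].read(handle, chunk_offset, length)


manager = Manager()
manager.files["/logs/web.log"] = [
    ("h0", ["cs-a", "cs-b", "cs-c"]),
    ("h1", ["cs-b", "cs-c", "cs-d"]),
]
chunkservers = {name: Chunkserver() for name in ["cs-a", "cs-b", "cs-c", "cs-d"]}
for name in ["cs-b", "cs-c", "cs-d"]:
    chunkservers[name].chunks["h1"] = b"hello chunk one"

# Byte offset CHUNK_SIZE + 6 falls in the second chunk, at offset 6.
print(client_read(manager, chunkservers, "/logs/web.log", CHUNK_SIZE + 6, 5))  # b'chunk'
```

Keeping file data off the manager's path is what lets a single metadata node serve a large cluster: it answers small lookups while the chunkservers carry the bulk traffic.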

Design choices and compromises

The design of GFS reflects several conscious choices and compromises:

- The centralized manager node design: The centralized manager node simplifies metadata management and makes metadata handling efficient, but it introduces a single point of failure and a potential bottleneck. GFS addresses the failure risk by implementing periodic checkpoints and log-based recovery, allowing the manager node to recover quickly from failures.

- High throughput vs. latency: GFS is optimized for high throughput, particularly for sequential I/O patterns. This design choice supports large-scale batch processing tasks efficiently. However, it compromises latency, making GFS less suitable for small files, random reads, and real-time workloads.

- Relaxed consistency model: GFS implements a relaxed consistency model optimized for append operations, which are the most frequent write pattern. This model simplifies data management and improves performance for append-heavy workloads, but it can lead to temporary inconsistencies. For example, during concurrent appends, clients might see different versions of the file until the system stabilizes, so some clients may read stale data. This shifts work onto application developers, who must detect and handle these inconsistencies themselves.
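One way an application can cope with a relaxed, append-oriented consistency model is to make each record self-validating and uniquely identifiable, then filter on read. The sketch below assumes that approach. The record layout and the helper names are hypothetical and do not represent a GFS API.

```python
import zlib


def make_record(record_id, payload):
    """Wrap a payload with a unique ID and a CRC32 checksum."""
    return {"id": record_id, "payload": payload, "crc": zlib.crc32(payload)}


def read_valid_records(raw_records):
    """Yield each record once, skipping corrupted and duplicated entries."""
    seen = set()
    for record in raw_records:
        if zlib.crc32(record["payload"]) != record["crc"]:
            continue  # corrupted or partially written record
        if record["id"] in seen:
            continue  # duplicate left behind by a retried append
        seen.add(record["id"])
        yield record


first = make_record(1, b"view:/home")
second = make_record(2, b"view:/cart")
third = make_record(3, b"view:/pay")

# A damaged copy of the third record: the payload changed, the checksum did not.
damaged = dict(third, payload=b"view:/paX")

# The log as a reader might see it after a retried append and a bad write.
log = [first, second, second, damaged, third]
print([record["id"] for record in read_valid_records(log)])  # [1, 2, 3]
```

The storage layer stays simple and fast, while the reader pays a small cost to restore the guarantees it actually needs.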

Google: Colossus

Colossus is a distributed file system developed by Google as the successor to its earlier system, GFS. It is designed to address the limitations of GFS and to meet the increasing demands of Google’s infrastructure and services. The following characteristics distinguish Colossus from GFS:

- Scalable metadata model: One of the key enhancements in Colossus is adopting a distributed metadata model. Unlike GFS, which relied on a single manager node for metadata management, Colossus distributes metadata operations across multiple metadata servers. This distributed approach improves scalability, fault tolerance, and performance by parallelizing metadata operations and reducing the load on individual servers.

- Support for diverse workloads: Colossus is designed to support a wide range of workloads, including batch processing, analytics, and real-time data processing. It provides high throughput and low latency for both sequential and random access patterns, making it suitable for diverse application requirements within Google’s ecosystem.

Architecture of Colossus

The Colossus architecture, shown in the diagram below, consists of several components.

Client library: The client library serves as the interface through which applications or services interact with Colossus. It handles various functionalities such as file read/write operations, data encoding for performance optimization, and software RAID for data redundancy. Applications built on top of Colossus can use different encodings to fine-tune performance and cost trade-offs for different workloads.

Colossus control plane: The control plane is the foundation of Colossus, consisting of a scalable metadata service. It manages and coordinates the operations of various components within Colossus.

- Curators: The control plane includes multiple curators, which are responsible for storing and managing file system metadata. Clients communicate directly with curators for control operations such as file creation, ensuring efficient and scalable metadata management. The curators can scale horizontally to accommodate increasing demands and workload diversity.

- Metadata database (Bigtable): Curators store file system metadata in Google’s high-performance NoSQL database, Bigtable. Storing metadata in Bigtable enables Colossus to achieve significant scalability improvements compared to GFS. This scalability allows Colossus to handle much larger clusters and accommodate the storage needs of Google’s various services and applications.

- Custodians: Custodians are background storage managers within Colossus. They ensure the durability, availability, and efficiency of stored data by handling tasks such as disk space balancing, RAID reconstruction, and overall system maintenance to maintain data integrity and system performance.

D file servers: D file servers are responsible for storing and serving data to clients. They facilitate direct data transfer between clients and storage disks, minimizing network hops and optimizing data transfer efficiency. This direct data flow helps reduce latency and improves overall system performance.

The transition from Google File System to Colossus represents a significant evolution in Google’s storage infrastructure, enabling the company to continue scaling its data storage and processing capabilities to meet the demands of its growing user base and services.

Apache: Hadoop Distributed File System (HDFS)

Hadoop Distributed File System (HDFS) is designed to store and manage large volumes of data across clusters of commodity hardware. It is a core component of the Apache Hadoop ecosystem and is optimized for handling big data. HDFS divides files into fixed-size blocks, typically 128 MB, and replicates these blocks across multiple DataNodes for fault tolerance and high availability. It supports distributed data processing frameworks like MapReduce, enabling organizations to efficiently analyze and derive insights from massive datasets. HDFS emphasizes data locality: compute tasks are scheduled on the nodes where the data resides whenever possible, minimizing network traffic and improving performance.
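The block layout and the data-locality preference can be sketched in a few lines of Python. The `schedule_task` function, the node names, and the slot counts are hypothetical and do not reflect the actual Hadoop scheduler.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the typical block size mentioned above


def block_count(file_size):
    """Number of blocks needed for a file of `file_size` bytes (integer ceiling)."""
    return -(-file_size // BLOCK_SIZE)


def schedule_task(block_locations, free_slots):
    """Pick a node for a task, preferring one that already stores the block."""
    for node in block_locations:
        if free_slots.get(node, 0) > 0:
            return node, "local"
    # No node holding the block has capacity: fall back to any free node,
    # which means the block must be read over the network.
    for node, slots in free_slots.items():
        if slots > 0:
            return node, "remote"
    raise RuntimeError("no free task slot in the cluster")


one_gib = 1024 * 1024 * 1024
print(block_count(one_gib))      # 8
print(block_count(one_gib + 1))  # 9

replicas = ["dn2", "dn3", "dn5"]
print(schedule_task(replicas, {"dn1": 2, "dn2": 0, "dn3": 1}))  # ('dn3', 'local')
print(schedule_task(replicas, {"dn1": 2, "dn2": 0, "dn3": 0}))  # ('dn1', 'remote')
```

Replicating each block on several nodes helps here as well, because more replicas give the scheduler more chances to find a local slot.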

The architecture of HDFS closely resembles that of GFS. The manager node in GFS is called the NameNode in HDFS; the chunkservers in GFS are called the DataNodes in HDFS; and the chunks in GFS are called blocks in HDFS.

A GFS or HDFS cluster can consist of thousands of storage nodes, each with terabytes of storage capacity, which allows a single cluster to accommodate petabytes of data. While HDFS and GFS share a similar distributed architecture and key features, they differ in their origin and ownership, consistency model, workload support, and visibility of changes. The table below highlights these differences.

HDFS vs. GFS

| Point of Difference | GFS | HDFS |
|---|---|---|
| Origin and Ownership | GFS was developed internally by Google to meet the company’s storage needs. While details about GFS have been published in research papers, it remains a proprietary system owned and used exclusively by Google. | HDFS is an open-source project developed as part of the Apache Hadoop ecosystem. It is maintained and governed by the Apache Software Foundation and is freely available for anyone to use and contribute to. |
| Consistency Model | GFS implements a relaxed consistency model optimized for append-heavy workloads. It prioritizes high throughput for append operations, sacrificing strict consistency guarantees. | HDFS follows a consistency model similar to traditional local POSIX file systems, emphasizing one-copy-update semantics. |
| Workload Support | GFS is designed to efficiently handle append-heavy workloads, allowing multiple clients to append data to the same file concurrently. | HDFS supports various workloads, including batch processing and analytics, while ensuring strong consistency. |
| Visibility of Changes | In GFS, changes to files might not be immediately visible to all clients, and updates propagate gradually, leading to delayed visibility. | HDFS provides immediate visibility of changes upon completion of operations like file creation, update, or deletion, ensuring consistency across the cluster. |

Meta’s distributed file systems: From Haystack to Tectonic

As Meta’s user base and data needs exploded, robust and scalable storage solutions became paramount. Here’s a breakdown of their journey, starting with the early systems:

Haystack

Haystack is a specialized photo storage system developed by Meta to efficiently store and serve the billions of photos uploaded by users daily.

The need for Haystack arose from the limitations of Meta’s existing NFS-based photo storage infrastructure, which faced performance bottlenecks due to the overwhelming metadata generated by each uploaded photo, stored in four sizes (large, medium, small, and thumbnail). This metadata led to increased I/O operations, hindering the system’s scalability and performance.

Haystack was developed to address these challenges and provide a scalable, efficient solution for storing and serving photos on Meta. It reduces the metadata kept per photo so that all of it fits in an in-memory data structure, which cuts down on disk I/O and boosts overall system performance.

Architecture of Haystack

The following is an architecture diagram of the Haystack photo storage system:

HTTP server: The HTTP server acts as the interface between clients and the Haystack system, handling client requests related to photo uploads and viewing.

Photo store server: This component manages the storage and retrieval of photos within Haystack. It is responsible for indexing photos for efficient access and managing the metadata associated with each photo.

Haystack object store: The core storage component of Haystack, the object store stores photo data in a log-structured (append-only) format. It consists of two main files: the haystack store file containing the actual photo data (needles) and an index file that aids in the efficient retrieval of photos.
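The append-only layout can be illustrated with a small sketch. The `NeedleStore` class is a hypothetical simplification: an in-memory buffer stands in for the store file, and a dictionary stands in for the index.

```python
import io


class NeedleStore:
    """Append-only blob store with an in-memory index (illustrative only)."""

    def __init__(self):
        self.store_file = io.BytesIO()  # stand-in for the on-disk store file
        self.index = {}                 # photo_id -> (offset, size)

    def put(self, photo_id, data):
        # Always append at the end; existing bytes are never rewritten.
        offset = self.store_file.seek(0, io.SEEK_END)
        self.store_file.write(data)
        self.index[photo_id] = (offset, len(data))

    def get(self, photo_id):
        # One index lookup in memory, then one seek and one read.
        offset, size = self.index[photo_id]
        self.store_file.seek(offset)
        return self.store_file.read(size)


store = NeedleStore()
store.put("photo-1", b"JPEG-1")
store.put("photo-2", b"JPEG-22")

print(store.get("photo-1"))    # b'JPEG-1'
print(store.index["photo-2"])  # (6, 7)
```

Because the location of every photo is known from memory, serving a photo needs a single read from the store file rather than several metadata lookups on disk.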

File system: This component provides file management functionality within Haystack, organizing storage space into directories and files. It handles low-level disk operations and manages data storage on physical volumes.

Storage: Physical hardware utilized by Haystack to store photo data. It typically comprises commodity storage blades with specific hardware configurations, including CPUs, memory, RAID controllers, and SATA drives.

F4: Warm blob storage

The development of Meta’s warm BLOB storage system, F4, was driven by the need to efficiently manage the increasing volume of Binary Large Objects (BLOBs) in the company’s storage infrastructure. As the volume of BLOBs grew, the existing storage system, Haystack, became less efficient in terms of storage utilization. To address this inefficiency, Meta analyzed the access patterns of BLOBs and identified two temperature zones: hot BLOBs, which are accessed frequently, and warm BLOBs, which are accessed less often. Hot BLOBs are stored on Haystack.

F4 was specifically designed to handle warm BLOBs, aiming to improve storage efficiency by reducing the effective replication factor of these less frequently accessed data while ensuring fault tolerance and maintaining adequate throughput.

F4 achieves storage efficiency for warm BLOBs by using a special type of storage unit called a cell. These cells store data using erasure coding, which reduces the number of physical copies needed compared to full replication. While this takes longer to recover from failures, it’s acceptable for warm BLOBs, which are accessed less often.

To maintain some level of fault tolerance, F4 replicates critical data like indexes within the cell and uses erasure coding with redundancy for the actual BLOB data.
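The storage saving from erasure coding can be shown with a deliberately simple example. The sketch below uses single-parity XOR as a stand-in for a real erasure code, and the block contents and the (10, 4) layout in the overhead calculation are illustrative parameters, not a description of F4’s actual encoding.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def storage_overhead(data_blocks, parity_blocks):
    """Physical bytes stored per logical byte for a given coding layout."""
    return (data_blocks + parity_blocks) / data_blocks


data = [b"blob", b"warm", b"data"]
parity = xor_blocks(data)

# Lose the second block, then rebuild it from the survivors and the parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt)  # b'warm'

print(storage_overhead(10, 4))  # 1.4, versus 3.0 for three full replicas
```

Rebuilding a lost block requires reading every surviving block in the stripe, which is why recovery is slower than copying a replica and why the trade-off suits data that is read less often.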

Tectonic file system

Tectonic is Meta’s current exabyte-scale distributed file system designed to address the limitations of Haystack and F4. As Meta’s data storage demands grew and diversified, managing separate systems (Haystack for hot data and F4 for warm data) became complex. Tectonic emerged to address these challenges:

- Consolidation: It unifies storage for various data types (photos, videos, posts) under a single system, eliminating the need for separate solutions like Haystack and F4.

- Scalability: Tectonic’s architecture allows for horizontal scaling to accommodate Meta’s ever-increasing data storage requirements.

- Efficiency: It optimizes resources by eliminating the overhead of managing multiple systems.

- Versatility: It caters to diverse workloads by enabling tenant-specific optimizations for different Meta services.

Architecture of Tectonic

Tectonic utilizes a client-driven, microservice-based architecture with a layered approach, as shown in the image below.

Client library: This component acts as a client-side proxy. It translates file system API calls initiated by applications (read, write, delete) into remote procedure calls (RPCs) for interaction with the metadata and chunk store. Essentially, the client library serves as the intermediary between applications and the distributed file system.

Metadata store: This component manages file system metadata in a sharded key-value store called ZippyDB. Metadata is separated into distinct layers (name, file, and block), and each layer is sharded so that it can scale independently based on workload. This keeps metadata management from becoming a bottleneck as data volumes grow.
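The layered metadata lookup can be sketched as three independent key-value stores. Everything here is a simplification under stated assumptions: `ShardedKV` is an in-memory stand-in rather than ZippyDB, the shard counts are arbitrary, and the keys, file IDs, block IDs, and disk names are hypothetical.

```python
import hashlib


class ShardedKV:
    """In-memory stand-in for a hash-sharded key-value store."""

    def __init__(self, num_shards):
        self.shards = [{} for _ in range(num_shards)]

    def _shard(self, key):
        # A stable hash picks the shard, so the same key always lands in one place.
        digest = hashlib.sha256(key.encode()).digest()
        return self.shards[int.from_bytes(digest[:8], "big") % len(self.shards)]

    def put(self, key, value):
        self._shard(key)[key] = value

    def get(self, key):
        return self._shard(key)[key]


# Each layer has its own store and shard count, so it can grow on its own.
name_layer = ShardedKV(4)    # directory path -> {entry name: file id}
file_layer = ShardedKV(8)    # file id -> list of block ids
block_layer = ShardedKV(16)  # block id -> list of storage locations

name_layer.put("/photos", {"cat.jpg": "file-7"})
file_layer.put("file-7", ["blk-1", "blk-2"])
block_layer.put("blk-1", ["disk-a", "disk-b"])
block_layer.put("blk-2", ["disk-c", "disk-d"])


def resolve(path):
    """Walk name -> file -> block layers to find where a file's data lives."""
    directory, _, filename = path.rpartition("/")
    file_id = name_layer.get(directory)[filename]
    return [(block_id, block_layer.get(block_id))
            for block_id in file_layer.get(file_id)]


print(resolve("/photos/cat.jpg"))
```

Splitting the lookup this way means a workload that lists many directories stresses only the name layer, while one that reads many large files stresses the block layer.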

Stateless background services: Running on individual cluster nodes, these stateless services perform essential tasks in the background:

- Garbage collector: Reclaims storage space by identifying and removing data associated with deleted files or expired chunks.

- Rebalancer: Maintains an optimal distribution of data across storage devices within the cluster for efficient performance.

- Stats service: Gathers statistics on various system aspects, such as storage utilization, data access patterns, and background service performance. This data empowers administrators to optimize resource allocation and ensure system health.

- Disk inventory: Monitors disk space to optimize resource allocation and prevent bottlenecks.

- Block repair/scan: Likely focuses on data integrity within the chunk store, detecting and repairing corrupted data blocks or proactively scanning for potential issues.

- Storage node health checker: Oversees the health and functionality of individual nodes within the cluster, identifying and addressing potential issues to ensure uninterrupted operation.

Chunk store: Responsible for storing, serving, and managing the actual data chunks or blocks of files.

Key features of Tectonic

- Single unified system: Manages all data types, eliminating the need for separate solutions.

- Horizontal scalability: Easily scales to accommodate growing data volumes.

- Microservices architecture: Enables flexibility and independent scaling of components.

- Tenant-specific optimizations: Allows Meta services to fine-tune performance for their specific workloads.

- Exabyte-scale storage: Capable of handling massive data volumes.

Use cases of Tectonic

- User photos and videos: This was a core function of Haystack, and Tectonic seamlessly carries over this functionality. It efficiently stores and retrieves the massive amount of photos and videos uploaded by Facebook users.

- Social network data: Tectonic manages the ever-growing data associated with Meta’s core functionality—user profiles, connections, posts, comments, and likes. This data requires efficient storage and retrieval to power the social networking experience.

- Application data: Facebook offers various applications beyond core social networking features. Tectonic caters to the storage needs of these applications, ensuring efficient handling of their specific data types.

- Log data: The vast amount of log data generated by Meta’s servers and applications needs to be stored and managed. Tectonic’s scalability and ability to handle diverse workloads make it suitable for this purpose.

Conclusion

Exploring distributed file systems offers invaluable insights into the design principles and engineering considerations essential for building robust, scalable, and efficient distributed systems. From Google File System (GFS) to Meta’s Tectonic, each distributed file system showcases key architectural decisions and trade-offs made to address the challenges of storing and processing massive volumes of data in distributed environments. The evolution of distributed file systems highlights the iterative nature of system design, emphasizing the need for continuous improvement, adaptation to changing requirements, and learning from real-world usage.

If you want to study distributed systems in depth, from learning how to design your own systems to understanding the design principles of real-world distributed systems, such as the consistency model of the Google File System (GFS) and the role of consensus algorithms like Paxos in achieving consistency among replicas, then the following courses are highly recommended for you.